Week 3: CNN — Convolutional Neural Networks, Cable News Network, or Confusing Noisy Nuisances?

March 15, 2024

Hello, my fellow collections of atoms, so uniquely arranged that we classify one another as “humans.” Where were we again? Oh right: asteroids, computer vision, and convolutional neural networks!

You might be asking: what really is a convolutional neural network? And I’m here to answer that question. A convolutional neural network is simply a type of neural network that uses convolution layers to process images! Wow, I’m truly an expert.

Quick recap of neural networks: they’re loosely inspired by the neurons in a brain. A network is a bunch of nodes (circles) connected by weights (lines). The value of a node is the sum of each weight times the value of the node it connects to in the previous layer. The weights are tuned as the network is trained, until the network’s output node(s) produce something that’s hopefully close to the desired output.
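To make that recap concrete, here’s a tiny pure-Python sketch of one fully connected layer (purely illustrative, not code from my project). Real networks usually also add a bias to each weighted sum and pass the result through an activation function such as ReLU, so I’ve included both. The function names, weights, and inputs are made up for the example.

```python
def dense_layer(inputs, weights, biases):
    """One fully connected layer: output node k is the sum of
    weights[k][i] * inputs[i] over every input node i, plus biases[k]."""
    return [
        sum(w * x for w, x in zip(node_weights, inputs)) + bias
        for node_weights, bias in zip(weights, biases)
    ]


def relu(values):
    """A common activation function: negative sums become 0."""
    return [max(0.0, v) for v in values]


# Hypothetical example: 2 input nodes feeding 2 output nodes.
inputs = [1.0, 2.0]
weights = [[0.5, -1.0],  # weights into output node 0
           [1.0, 1.0]]   # weights into output node 1
biases = [0.0, 0.5]

raw = dense_layer(inputs, weights, biases)
print(raw)        # [-1.5, 3.5]
print(relu(raw))  # [0.0, 3.5]
```

Training is the part that nudges the numbers in `weights` and `biases` until the outputs line up with the answers you want.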

Now imagine trying to feed a 2D image into that flat, one-dimensional (linear) input layer. That’s kinda difficult, right? Especially when you’re trying to preserve info like color groups and the relative positions of pixels.

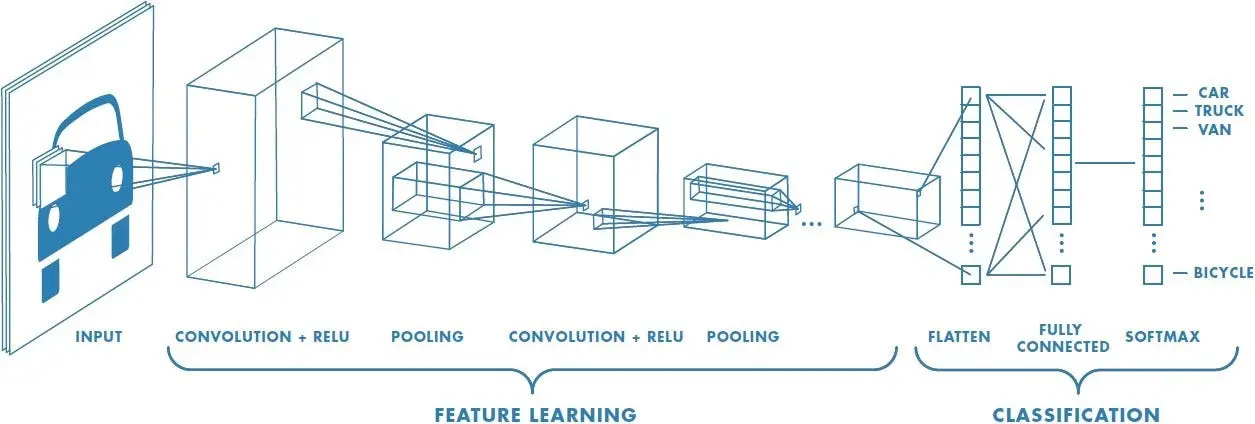

Instead, what if we tack something onto the beginning of this neural network that smashes a 2D image down into a 1D array while keeping most of the important 2D information? We do this by using a “2D weight” between layers instead of the many individual linear connections between layers in a traditional neural network. These “2D weights” are called filters or kernels. Essentially, each filter slides across the image and picks out (filters for) a specific characteristic, like an edge or a bright streak, producing a map of where that characteristic shows up. The values in a filter are adjusted and tuned during training, just like regular weights.

Layer by layer, this process simplifies the 2D input matrix into a much smaller 2D matrix that (hopefully, if all went well) still contains most of the important information from the original input. That smaller matrix is then smushed, or flattened, into a 1D array and fed in as the input to our traditional neural network.

So why is it called a convolutional neural network? Because the layers that apply these filters are called convolution layers. (There are also pooling layers, which shrink the matrix by summarizing each small patch, for example by keeping only its largest value, but they’re less cool sounding than convolution layers.) Here’s a nice diagram:

I know that was a mouthful, but you don’t need to understand any of it to follow what I’ve done in my project.
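If you do want to peek under the hood anyway, here’s a minimal pure-Python sketch of the steps described above: sliding a filter over an image, max pooling, and flattening. It’s illustrative only, not the code from my project. The 3x3 kernel is a hand-picked horizontal-line detector I made up for the example; in a real CNN, the kernel values are learned during training. Like the convolution layers in most deep learning libraries, `conv2d` here doesn’t flip the kernel (technically that makes it cross-correlation).

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation, no kernel flip): slide the
    kernel over every position where it fits entirely inside the image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each
    size x size patch; leftover edge rows/columns are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def flatten(matrix):
    """Smush a 2D matrix into a 1D list, row by row."""
    return [value for row in matrix for value in row]


# Hypothetical hand-made kernel that responds strongly to a bright horizontal line.
HORIZONTAL_LINE = [
    [-1, -1, -1],
    [2, 2, 2],
    [-1, -1, -1],
]

image = [[0] * 6 for _ in range(6)]
image[2] = [1] * 6  # a bright horizontal line across row 2

features = conv2d(image, HORIZONTAL_LINE)
print(features)  # [[-3, -3, -3, -3], [6, 6, 6, 6], [-3, -3, -3, -3], [0, 0, 0, 0]]
pooled = max_pool(features)
print(pooled)    # [[6, 6], [0, 0]]
print(flatten(pooled))  # [6, 6, 0, 0]
```

The big values show up exactly where the kernel lines up with the bright row, and pooling plus flattening squeeze the 6x6 image down to four numbers that a regular neural network can take as input.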

Now, there are some real smart computer scientists who have made some real good convolutional neural networks for finding some real complex stuff in real high-resolution images. Some of the best-known high performers are the models in the EfficientNet family, which can very accurately complete extremely difficult image classification tasks. Computer scientists are sometimes kinda very smart!

But the thing is, the image classification task I’m trying to tackle isn’t all that difficult: essentially, it boils down to “is there a bright line in the image?” Hence, an EfficientNet model might possibly (foreshadowing!!) be a bit too bulky and smart to detect asteroids efficiently. Imagine using an axe to cut paper: it’ll cut it, but not as efficiently as a pair of scissors.

So, I’ve settled on building a simpler, dumbed-down architecture using the convolution and pooling layers from the popular Keras library. After some extensive testing, I’ve created a model that is both lightweight and efficient: for this task, it’s more efficient than EfficientNet, believe it or not! So, in a way, I beat the top machine learning computer scientists in a contest they didn’t sign up for! But we’ll take the win!
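To give a feel for why a small stack of convolution and pooling layers can be so lightweight, here’s a back-of-the-envelope parameter count in plain Python. The architecture is hypothetical and made up for illustration (a 64x64 grayscale input, two 3x3 convolution layers with 8 and 16 filters, each followed by 2x2 max pooling, then a single output node for “asteroid or not”); it is not the exact model I ended up with. The formulas assume “valid” padding, stride 1 for convolutions, pooling stride equal to the pool size, and a bias term in every filter and dense node, which as far as I know match the Keras defaults for `Conv2D`, `MaxPooling2D`, and `Dense`.

```python
def conv_output_size(size, kernel):
    # 'valid' padding, stride 1: the kernel must fit entirely inside the input
    return size - kernel + 1


def pool_output_size(size, pool):
    # 'valid' padding, stride equal to the pool size; leftover edge pixels are dropped
    return (size - pool) // pool + 1


def conv_params(kernel, in_channels, filters):
    # each filter has kernel * kernel * in_channels weights plus one bias
    return (kernel * kernel * in_channels + 1) * filters


def dense_params(in_features, units):
    # one weight per input per unit, plus one bias per unit
    return (in_features + 1) * units


# Hypothetical architecture:
# 64x64x1 -> conv(8) -> pool -> conv(16) -> pool -> flatten -> dense(1)
size, channels = 64, 1
total = 0

total += conv_params(3, channels, 8)            # 80 parameters
size, channels = conv_output_size(size, 3), 8   # 62x62x8
size = pool_output_size(size, 2)                # 31x31x8 (pooling adds no parameters)

total += conv_params(3, channels, 16)           # 1168 parameters
size, channels = conv_output_size(size, 3), 16  # 29x29x16
size = pool_output_size(size, 2)                # 14x14x16

flat = size * size * channels                   # 3136 values after flattening
total += dense_params(flat, 1)                  # 3137 parameters

print(flat, total)  # 3136 4385
```

A few thousand trainable parameters is tiny next to the millions in even the smallest EfficientNet model. If you build the same layers in Keras, `model.summary()` should report matching per-layer counts.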

Anyways, that’s about it for this week. Next, I’m gonna apply the model(s) I’ve built to some real data (remember, everything I’ve done so far has been on deepfake simulated data) and report the results.

It’s been your fellow human being smashing their life away on a plastic keyboard! Until next time, peace!
