Week #1: Trying Machine Learning, NAND Flash, and a Whole Lot of Learning!

March 1, 2025

Welcome back to my blog! This week I learned a lot of inspiring new concepts about NAND Flash and machine learning, and I’m so excited to share them with you all. At this stage of my project, my focus is on deepening my knowledge of NAND Flash memory and getting familiar with machine learning.

Before I begin, here’s some quick background information that’ll come in handy: NAND Flash is a type of non-volatile memory, which means that it retains data even when power is removed, and it’s widely used in SSDs, mobile devices, and memory cards.

This week, I refreshed my fundamental understanding of NAND Flash memory and dug deeper into its internal organization. I learned about two types of NAND: raw and managed NAND. Managed NAND has error correction and other data management functions built in, while raw NAND doesn’t, so the host or an external controller has to handle those tasks. Error correction works by storing extra, redundant bits alongside the data so that bit errors in the NAND Flash memory can be detected and fixed automatically when the data is read back.

I also explored single-level cells (SLC), multi-level cells (MLC), and triple-level cells (TLC), which store 1, 2, and 3 bits per cell, respectively. SLCs have the highest performance, endurance, and reliability, while TLCs have the lowest cost per bit but come with tradeoffs such as slower performance and worse endurance.

A typical 2 Gb (gigabit) NAND Flash memory chip, also called a die, contains 2 planes. Together, the planes hold 2,048 blocks, 1,024 in each. Each block is made up of 64 pages, and each page holds 2,112 bytes (Shin, 2019). Of those, 64 bytes per page are set aside for error correction code, leaving 2,048 bytes for data. I found this organization really interesting because I didn’t realize that space for error correction code was built so specifically into every page.
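To double-check the organization above, here is a short Python sketch that multiplies out the chip geometry. It assumes, as described above, that 64 of each page’s 2,112 bytes go to error correction code, so only 2,048 bytes per page hold user data; the totals then work out to 256 MiB, or 2 gigabits. It also uses the standard fact that SLC, MLC, and TLC cells store 1, 2, and 3 bits, so each cell must hold one of 2, 4, or 8 distinct threshold voltage levels. The variable names are my own, not from any datasheet.

```python
# Geometry of the example NAND die described above (Shin, 2019).
PLANES = 2
BLOCKS_PER_PLANE = 1024
PAGES_PER_BLOCK = 64
PAGE_BYTES = 2112                # total bytes per page
ECC_BYTES_PER_PAGE = 64          # bytes set aside for error correction code
DATA_BYTES_PER_PAGE = PAGE_BYTES - ECC_BYTES_PER_PAGE  # 2048

total_blocks = PLANES * BLOCKS_PER_PLANE          # 2048
total_pages = total_blocks * PAGES_PER_BLOCK      # 131072
data_bytes = total_pages * DATA_BYTES_PER_PAGE    # 268435456

print(f"blocks: {total_blocks}")
print(f"pages:  {total_pages}")
print(f"data:   {data_bytes} bytes = {data_bytes // 2**20} MiB "
      f"= {data_bytes * 8 // 2**30} Gbit")

# Each extra bit per cell doubles the number of threshold-voltage levels
# the cell must hold apart (2 ** bits levels).
for name, bits in [("SLC", 1), ("MLC", 2), ("TLC", 3)]:
    print(f"{name}: {bits} bit(s)/cell -> {2 ** bits} voltage levels")
```

The last loop hints at why TLC is less reliable than SLC: squeezing 8 voltage levels into the same voltage window leaves much less room between neighboring levels.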

Now here comes the more interesting part! The most fundamental concept in my project is that an accurate threshold voltage is crucial for storing data correctly, because a cell’s threshold voltage is what determines the data value it represents. While reading about the operations engineers use to improve NAND Flash memory performance, I came across a technique called incremental step pulse programming (ISPP), which precisely sets a cell’s threshold voltage by applying a series of programming pulses, each slightly stronger than the last, and verifying the threshold voltage after each pulse (Micron, 2006). ISPP caught my eye immediately because this step-and-verify approach could be useful when I write my program to match each cell’s threshold voltage.
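The step-and-verify idea behind ISPP can be sketched as a simple loop. This is a toy model, not how a real NAND controller is implemented: the `SimulatedCell` class, the assumption that each pulse raises the threshold voltage (Vt) by roughly the step size, and all the voltage numbers are hypothetical values I chose for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class SimulatedCell:
    """Hypothetical toy model of one NAND cell (not a real device model)."""
    vt: float = -2.0  # erased threshold voltage in volts (made-up value)

    def apply_pulse(self, step: float) -> None:
        # Assumption: because each ISPP pulse is one step stronger than the
        # last, the cell's Vt rises by roughly the step size per pulse, with
        # some random cell-to-cell variation.
        self.vt += step * random.uniform(0.8, 1.2)


def ispp_program(cell: SimulatedCell, target_vt: float,
                 step: float = 0.2, max_pulses: int = 50) -> int:
    """Pulse, then verify, until the cell's Vt reaches target_vt.

    Returns the number of pulses used; raises RuntimeError if the cell
    still fails verification after max_pulses.
    """
    for pulse in range(1, max_pulses + 1):
        cell.apply_pulse(step)       # program pulse
        if cell.vt >= target_vt:     # verify: compare the read-back Vt to the target
            return pulse             # stop pulsing once the cell verifies
    raise RuntimeError("program failure: cell never reached the target Vt")


random.seed(0)
cell = SimulatedCell()
pulses = ispp_program(cell, target_vt=1.0)
print(f"verified after {pulses} pulses, Vt = {cell.vt:.2f} V")
```

In this model the final Vt overshoots the target by at most about one step, so shrinking `step` places Vt more precisely but takes more pulses. That precision-versus-speed tradeoff is what ISPP has to balance.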

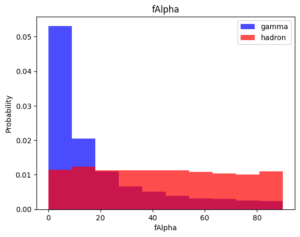

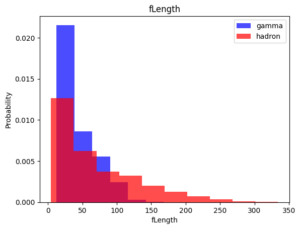

The highlight of my entire week was working with the MAGIC Gamma Telescope dataset. I know it sounds random, but the MAGIC Gamma Telescope dataset comes from UC Irvine’s Machine Learning Repository, and I used it to predict the type of particle event the telescope recorded (either gamma or hadron). I practiced supervised machine learning, starting by graphing the dataset in Python using NumPy, pandas, and Matplotlib. I learned to identify the independent variables (the features) and the dependent variable (the class label), and to find patterns in the dataset that help predict the result. I also split the dataset into three sets: (1) training, (2) validation, and (3) testing data. But when it came to the Standard Scaler, I was stuck. I didn’t understand why I needed to fit my data to a standard scale, or what would happen when I did. As I continue studying and applying machine learning, I’m excited to finally understand this concept.
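Since standard scaling was the part that confused me, here is a small NumPy sketch of what a standard scaler does, together with a train/validation/test split. Standard scaling rescales each feature column to mean 0 and standard deviation 1 using z = (x - mean) / std, so features measured on very different scales contribute more evenly to models that rely on distances or gradient steps. The data below is random stand-in data, not the real MAGIC columns, and the 60/20/20 split is an assumed choice. The mean and standard deviation are computed ("fit") on the training set only and then reused for the validation and test sets, so no information from those sets leaks into training.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in data (NOT the real MAGIC dataset): 100 rows, 3 features on very
# different scales, plus a 0/1 label (e.g. 1 = gamma, 0 = hadron).
X = rng.normal(loc=[50.0, 0.5, 200.0], scale=[20.0, 0.1, 80.0], size=(100, 3))
y = rng.integers(0, 2, size=100)

# Shuffle row indices, then split 60% / 20% / 20% into train, validation, test.
idx = rng.permutation(len(X))
train_idx, valid_idx, test_idx = np.split(idx, [int(0.6 * len(X)), int(0.8 * len(X))])
X_train, X_valid, X_test = X[train_idx], X[valid_idx], X[test_idx]
y_train, y_valid, y_test = y[train_idx], y[valid_idx], y[test_idx]


def fit_standard_scaler(X_fit):
    """Learn the per-column mean and (population) standard deviation."""
    return X_fit.mean(axis=0), X_fit.std(axis=0)


def transform(X_any, mean, std):
    """Apply z = (x - mean) / std column by column."""
    return (X_any - mean) / std


mean, std = fit_standard_scaler(X_train)     # fit on training data only
X_train_s = transform(X_train, mean, std)
X_valid_s = transform(X_valid, mean, std)    # reuse the training statistics
X_test_s = transform(X_test, mean, std)

print(X_train_s.mean(axis=0).round(6))  # close to 0 for every column
print(X_train_s.std(axis=0).round(6))   # close to 1 for every column
```

In scikit-learn, the same fit-on-train, transform-everything pattern is `StandardScaler().fit(X_train)` followed by `.transform(...)` on each split; it also uses the population standard deviation, as this sketch does.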

You might be wondering why I’m working with a completely different dataset this week. I’m using the MAGIC Gamma Telescope dataset to strengthen my understanding of machine learning fundamentals. After this first week, I’m already starting to see connections between machine learning and NAND Flash memory!

This week was full of exciting moments, but my favorite was seeing the clean results I got when I plotted 7 graphs of the MAGIC Gamma Telescope data with just two lines of code. Here are 2 of them!

That’s all for this week! Now that I understand NAND Flash memory better and have gained hands-on experience with machine learning, I realize how important these steps were in shaping my future project. Though I still need to review certain topics, like standard scaling, I’m excited to keep exploring how NAND Flash and ML connect. Next week, I will continue and wrap up the machine learning example I started this week! I hope you enjoyed reading about my first week, and I look forward to seeing you guys again next time!
